Introduction

This project is an Industry Track collaboration with Ember Robotics to design and implement a vision-guided pick-and-place system using a Techman robotic arm. Our goal is to manipulate fragile glass lab slides with high precision, which requires robust object detection, accurate motion planning, and precise actuation. This work addresses challenges common in laboratory environments and chemical-handling manufacturing lines, where current workflows suffer from:

Manual & Inefficient

Current slide-handling workflows require manual intervention.

Contamination Risks

Contamination introduced during handling can compromise experimental results.

Our Goal

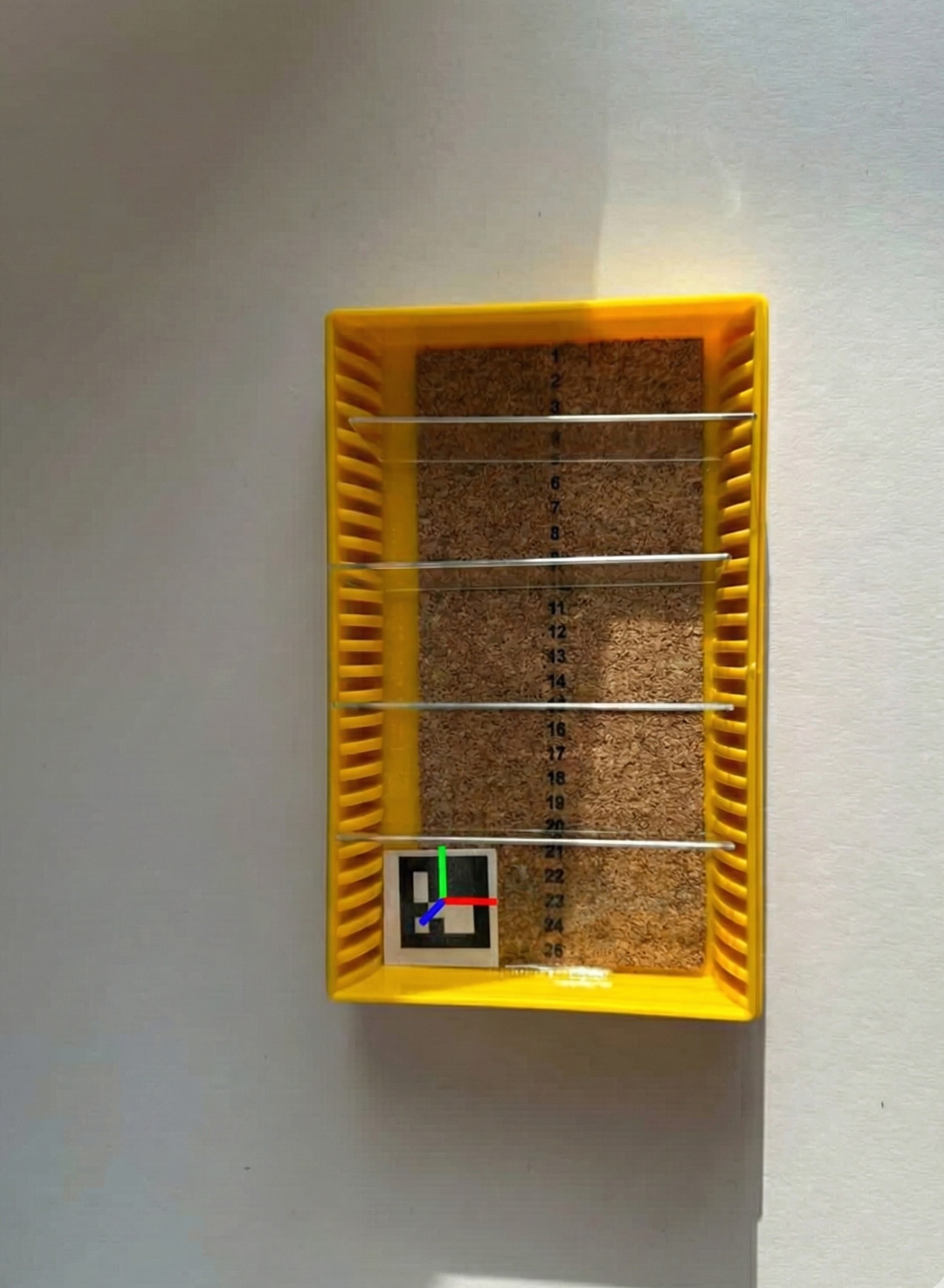

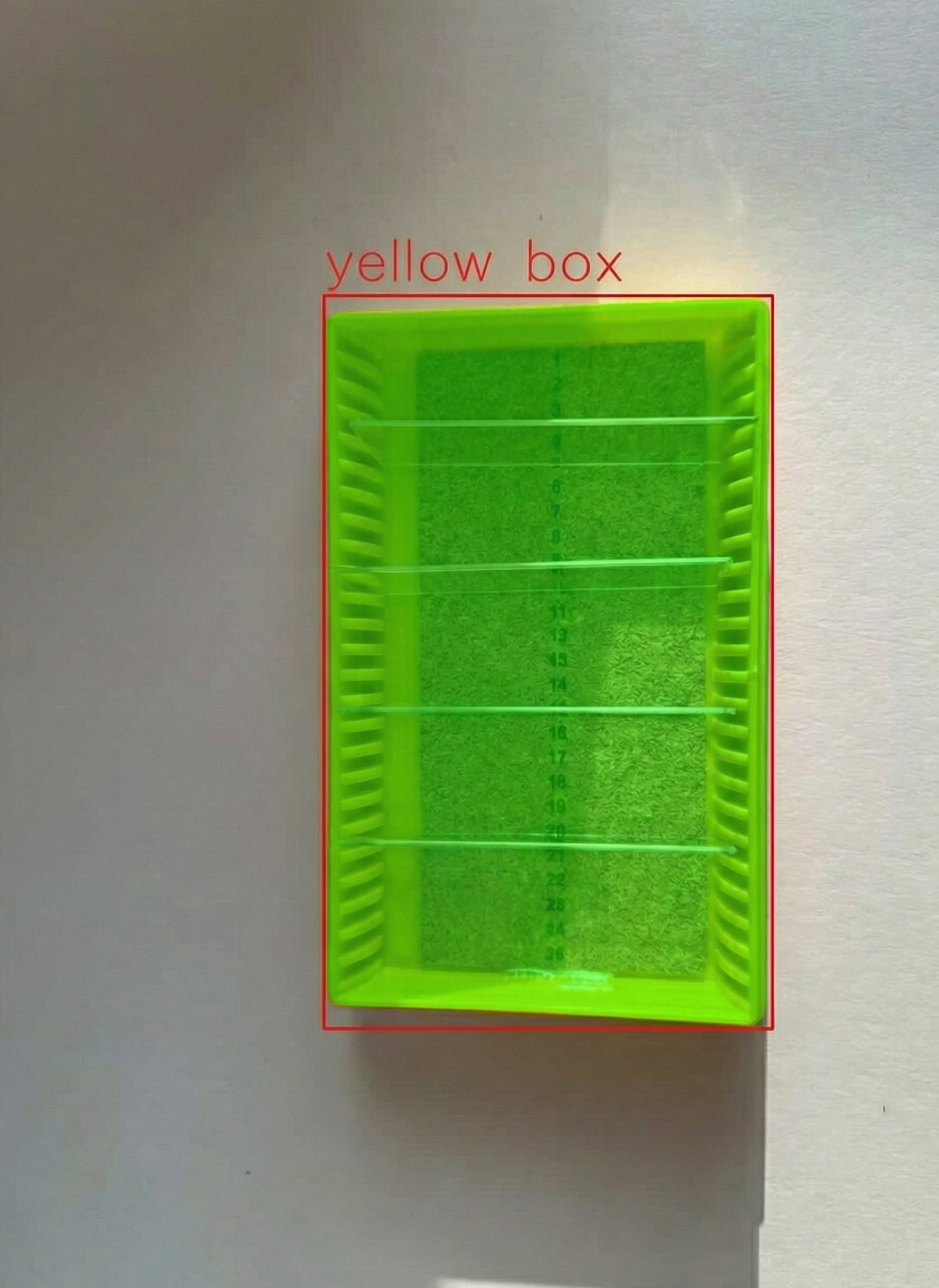

We designed, built, and tested a vision-guided pick-and-place system using a Techman TM12 robotic arm that manipulates and relocates glass lab slides with high precision. To complete the task, the robot detects the position and orientation of each slide, picks it from a source tray, and places it accurately onto a target rack. The project tackles several technical problems that make automated slide manipulation difficult:

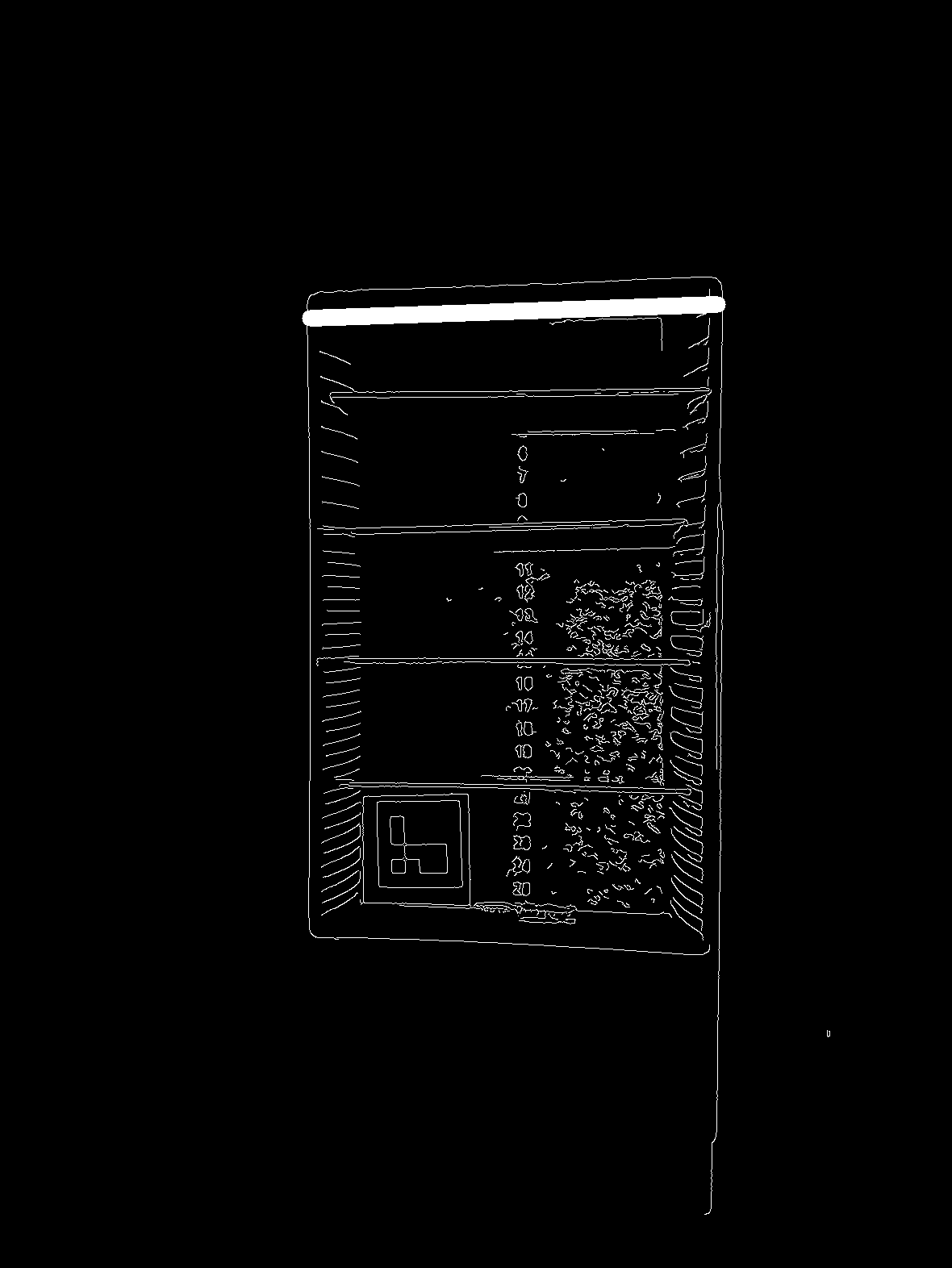

- Computer vision for slide identification

- Precise pick-and-place in 3D space

- Robust operation for continuous tasks
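Once a slide has been found, identifying it for grasping amounts to recovering a planar pose (x, y, yaw). The sketch below is a minimal, hypothetical illustration rather than our detection code. It makes the following assumptions:

- An upstream detector (not shown) already returns the slide's four corners.
- The corners are ordered around the rectangle and expressed in a metric table frame.
- The name `slide_pose_from_corners` is illustrative.

Because a slide looks the same after a 180° turn, the yaw is folded into a half-circle.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def slide_pose_from_corners(corners: List[Point]) -> Tuple[float, float, float]:
    """Hypothetical helper: planar pose (x, y, yaw) of a slide from its corners.

    Assumes four corners ordered around the rectangle (either direction),
    already expressed in a metric table frame.
    """
    if len(corners) != 4:
        raise ValueError("expected exactly four corners")

    # Center of the slide is the mean of its corners.
    cx = sum(p[0] for p in corners) / 4.0
    cy = sum(p[1] for p in corners) / 4.0

    # Two edges adjacent to corner 0.
    e1 = (corners[1][0] - corners[0][0], corners[1][1] - corners[0][1])
    e2 = (corners[3][0] - corners[0][0], corners[3][1] - corners[0][1])

    # Orientation is taken along the slide's long axis.
    long_edge = e1 if math.hypot(*e1) >= math.hypot(*e2) else e2
    yaw = math.atan2(long_edge[1], long_edge[0])

    # A rectangle is symmetric under a 180-degree turn: fold yaw into (-pi/2, pi/2].
    if yaw > math.pi / 2:
        yaw -= math.pi
    elif yaw <= -math.pi / 2:
        yaw += math.pi
    return cx, cy, yaw
```

For an axis-aligned 75 × 25 mm rectangle with corners `(0, 0)`, `(0.075, 0)`, `(0.075, 0.025)`, `(0, 0.025)`, the function returns a center of `(0.0375, 0.0125)` and a yaw of `0.0`.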

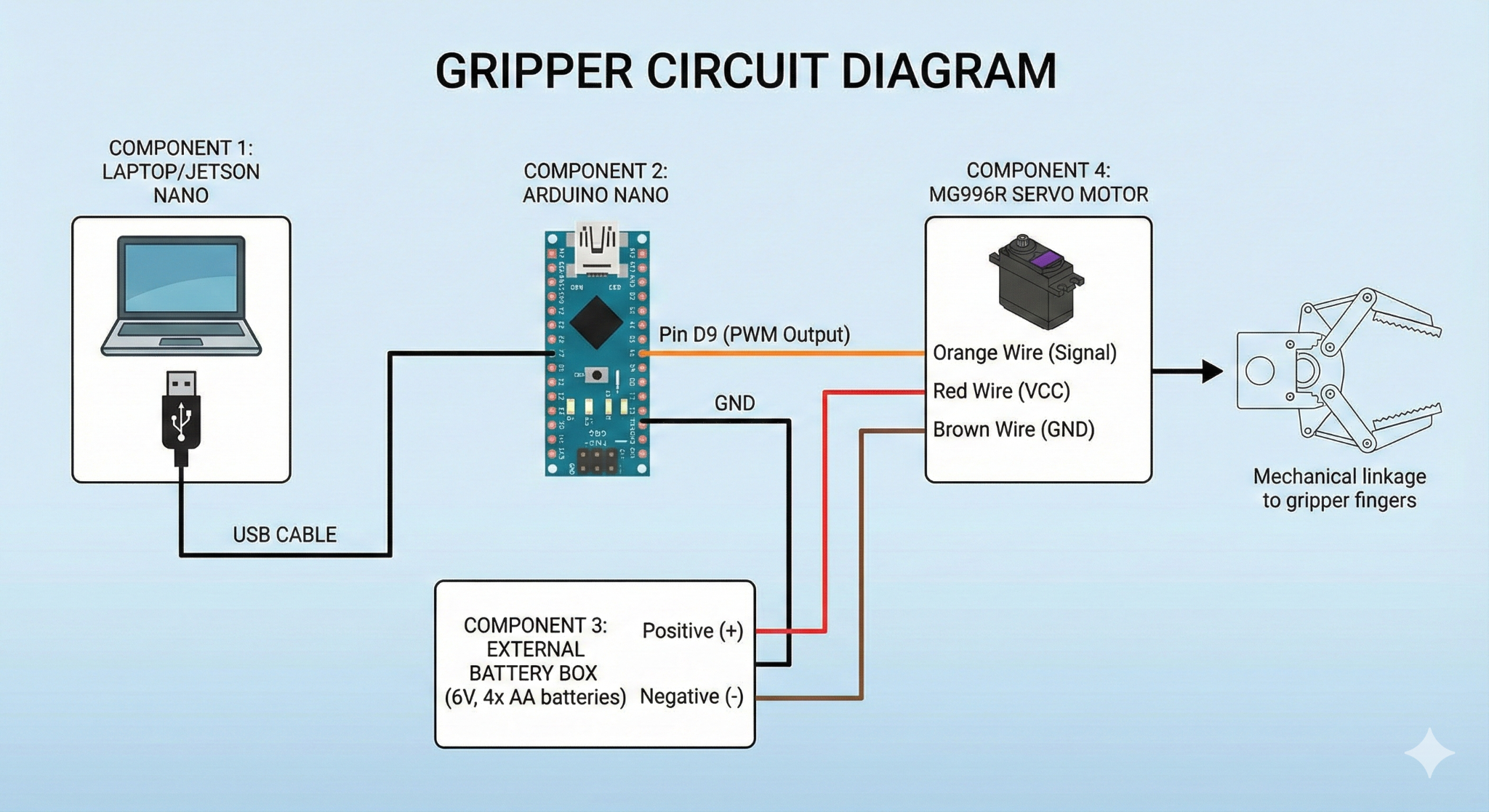

Our approach combines computer vision, motion planning, and custom hardware into a complete ROS2 system. We detect slide poses, compute precise trajectories, and execute robust pick-and-place actions with a custom adapter and gripper, targeting repeatable performance in real-world laboratory settings.
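One common way to structure the pick-and-place step is as a fixed sequence of poses: approach, grasp, lift, transfer, place, and retract. This ordering means the gripper moves only vertically when it is close to the fragile slide. The sketch below is hypothetical and is not the project's ROS2 interface. The names `Pose` and `pick_place_waypoints`, the 5 cm `approach` clearance, and the action labels are all illustrative. A real system would send each pose to a motion planner and each grip or release to the gripper driver instead of returning a list.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Pose:
    """Hypothetical tool pose: position in metres, yaw in radians."""
    x: float
    y: float
    z: float
    yaw: float


def pick_place_waypoints(
    slide: Pose, target: Pose, approach: float = 0.05
) -> List[Tuple[str, Pose]]:
    """Return an illustrative (action, pose) sequence for one slide.

    `approach` is an assumed clearance above the grasp and place poses.
    """

    def above(p: Pose) -> Pose:
        # Same x, y, yaw, raised by the approach clearance.
        return Pose(p.x, p.y, p.z + approach, p.yaw)

    return [
        ("move", above(slide)),   # hover over the slide
        ("move", slide),          # descend vertically to grasp height
        ("grip", slide),          # close the gripper
        ("move", above(slide)),   # lift straight up
        ("move", above(target)),  # travel above the rack slot
        ("move", target),         # descend vertically into the slot
        ("release", target),      # open the gripper
        ("move", above(target)),  # retract straight up
    ]
```

Keeping the sequence as plain data makes it easy to log and test offline before any pose is sent to the arm.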